Accuracy and Precision

Accuracy is about overall correctness.

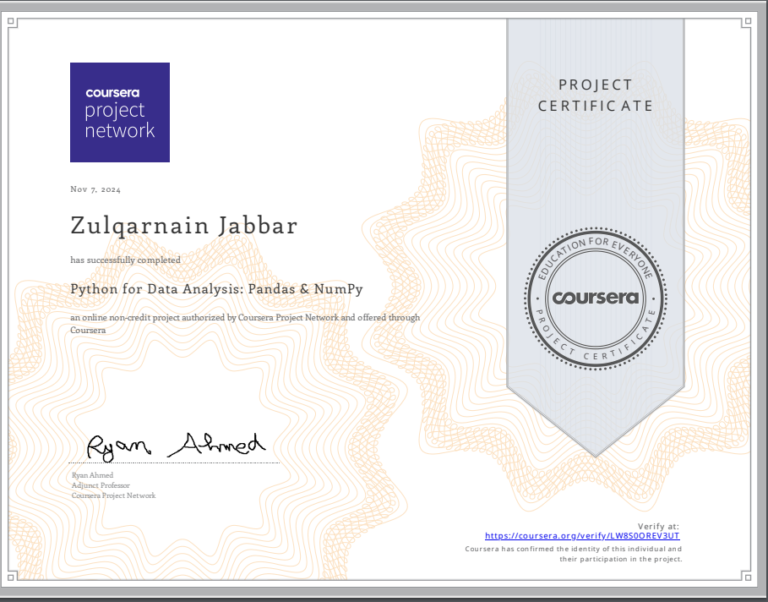

— Zulqarnain Jabbar (@Zulq_ai) January 15, 2025

Precision is about the reliability of positive predictions.

To delve deeper, let’s consider accuracy and precision in the context of binary classification, where the goal is to predict one of two classes—often labeled as “positive” and “negative.”

Accuracy: Overall Correctness

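Accuracy is a simple ratio that tells you how often the model is right overall: the number of correct predictions divided by the total number of predictions.

Accuracy = (TP + TN) / (TP + TN + FP + FN)

where: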

- True Positives (TP): Cases where the model correctly predicted the positive class.

- True Negatives (TN): Cases where the model correctly predicted the negative class.

- False Positives (FP): Cases where the model incorrectly predicted the positive class (the actual class was negative).

- False Negatives (FN): Cases where the model incorrectly predicted the negative class (the actual class was positive).
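As a minimal sketch, the four counts above combine into accuracy as the correct predictions (TP + TN) divided by all predictions. The function name and the sample counts below are hypothetical, chosen only for illustration.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that were correct."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("no predictions to score")
    return (tp + tn) / total


# Hypothetical counts for 100 predictions (not taken from a real model).
acc = accuracy(tp=40, tn=45, fp=5, fn=10)
print(acc)  # 0.85
```

Here 85 of the 100 predictions are correct, so accuracy is 0.85. Note that the value alone does not say whether the 15 errors were false positives or false negatives.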

In practical terms:

- If accuracy is high, it means that the model correctly labels a large proportion of the dataset.

- However, accuracy can be misleading if the dataset is imbalanced. For example, in a dataset where 90% of instances are of the negative class, simply predicting “negative” every time would give 90% accuracy, despite failing to identify the positive class at all.
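The imbalanced example above can be reproduced in a few lines. This is a sketch with made-up labels (90 negatives, 10 positives) and a trivial "model" that always predicts negative; the variable names are illustrative.

```python
# 100 hypothetical labels: 90 negative (0) and 10 positive (1).
y_true = [0] * 90 + [1] * 10

# A "model" that predicts negative for every instance.
y_pred = [0] * len(y_true)

# Accuracy: share of predictions that match the true label.
correct = sum(t == p for t, p in zip(y_true, y_pred))
baseline_accuracy = correct / len(y_true)

# How many of the actual positives did the model catch?
positives_found = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)

print(baseline_accuracy)  # 0.9
print(positives_found)    # 0
```

The model scores 90% accuracy without identifying a single positive instance, which is why accuracy on its own is a weak signal for imbalanced data.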

Precision: Quality of Positive Predictions

Precision gives insight into how “pure” the positive predictions are. It answers the question:

“Of all the instances that the model predicted as positive, how many were actually positive?”

Precision = TP / (TP + FP)

where:

- True Positives (TP): Correctly identified positive instances.

- False Positives (FP): Instances incorrectly labeled as positive.
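A minimal sketch of the calculation: precision divides the true positives by everything the model labeled positive (TP + FP). The function name and counts are hypothetical, and returning `None` when the model makes no positive predictions is a convention assumed here, since the ratio is undefined in that case.

```python
from typing import Optional


def precision(tp: int, fp: int) -> Optional[float]:
    """Fraction of positive predictions that were actually positive.

    Returns None when there are no positive predictions, because the
    ratio is undefined then (an assumption made for this sketch).
    """
    predicted_positive = tp + fp
    if predicted_positive == 0:
        return None
    return tp / predicted_positive


# Hypothetical counts: 50 positive predictions, 40 of them correct.
prec = precision(tp=40, fp=10)
print(prec)  # 0.8
```

True negatives and false negatives do not appear in the calculation at all: precision only looks at the instances the model flagged as positive.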

In practical terms:

- If precision is high, it means that when the model labels something as positive, it is usually correct.

- Precision is especially critical in situations where false positives are costly. For instance, if a model predicts whether an email is spam, a false positive (classifying a legitimate email as spam) could mean missing an important message. In such cases, high precision means that a “spam” label can be trusted.

How They Relate and When to Focus on Each

- Accuracy is useful when:

  - The dataset is balanced.

  - The cost of false positives and false negatives is similar.

  - You need a quick, broad measure of model performance.

- Precision is useful when:

  - The positive class is relatively rare (imbalanced datasets).

  - The cost of false positives is high.

  - You need a model to make as few incorrect positive predictions as possible.
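To see how the two metrics can disagree, the sketch below scores one set of predictions both ways. The data is invented for illustration (1,000 instances, 20 of them positive), and `confusion_counts` is a hypothetical helper, not a library function.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels where 1 = positive."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 0 and actual == 0:
            tn += 1
        elif predicted == 1 and actual == 0:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn


# Hypothetical imbalanced data: 20 positives followed by 980 negatives.
y_true = [1] * 20 + [0] * 980
# The model catches 10 of the positives and raises 20 false alarms.
y_pred = [1] * 10 + [0] * 10 + [1] * 20 + [0] * 960

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
acc = (tp + tn) / (tp + tn + fp + fn)
prec = tp / (tp + fp)

print(f"accuracy:  {acc:.2f}")   # accuracy:  0.97
print(f"precision: {prec:.2f}")  # precision: 0.33
```

With a rare positive class, accuracy stays at 97% because the many true negatives dominate, while precision shows that only one in three positive predictions is correct.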

In essence:

- Accuracy is about overall correctness.

- Precision is about the reliability of positive predictions.

By understanding the nuances and trade-offs, you can choose the metric that best reflects the priorities of your particular machine learning application.